I am increasingly worried about the newest trend of using AI to get grammatical explanations. The problem is that ChatGPT is not a tool from sci-fi movies - it does not do proper research, it does not consult grammar books and dictionaries, etc. It only produces something that looks very convincing on the surface, but very often has nothing to do with facts. What I am seeing (not only on this forum) is more and more cases of people spreading misinformation that ChatGPT produced, trusting that they are getting reliable information. Sometimes it’s in good faith, when people honestly ask whether what they got from the AI is correct, but there are also cases of people who just mindlessly copy and paste this misinformation without any disclaimer or warning, as if they were native speakers offering their expertise.

This is very dangerous and I think it should be seriously addressed, before the “Sentence discussions” forum becomes a place where no one can tell anymore whether what they just read came from someone who knows what they are talking about, or from an algorithm that made everything up. It is especially serious when it comes to less popular languages - just as an experiment, I asked ChatGPT about a few sentences in Polish (my mother tongue), which I had made grammatically incorrect on purpose. Not only did it fail to correct the actual mistakes, it even added new ones, and still made the result look very convincing to someone who is not advanced enough to spot them.

I really think it’s better to act now, before it’s too late - in my opinion it’s totally ok to ask on the forum whether what ChatGPT generated is correct, but just posting it as if it were additional commentary on the sentences should be strongly discouraged, if not banned.

Just as an aside, and equally worrying: another excellent site allows students to correct one another to earn points. The number of times one sees “Perfect!” in answer to a clearly incorrect English exercise, just to gain another point, is worrying. Adding proper corrections is tiring, and I’ve more or less given up, as points seem to be more rewarding than proper learning. It’s a relief to come back to ClozeM, where the Italian course is mostly spot-on, and if it isn’t, we ask on the forum.

I enjoyed reading your post. Happy new year!

This is a very valid concern and I completely agree.

Today I had to correct, yet again, some AI misinformation that had been mindlessly shared without any comment. The problem is that writing a post with corrections takes time, while just copying and pasting from ChatGPT takes a few seconds. If this becomes the norm, I wouldn’t count on people being willing to give up so much of their free time to correct this misinformation. I have already noticed a worrying thing - when I do my daily Clozemaster routine and see that a sentence has some comments, I of course check them, but the first thing I look at is who posted them. By now I already know that comments from certain users are worthless, because they are just copied and pasted from ChatGPT. @mike, is this really what we want the “Sentence discussions” to turn into?

Thanks for the post! I appreciate the concern, and we need to give it more thought. I also want to reference the thread “Please consider removing the Explain feature entirely”, which voices related concerns.

I do want to note that the comments you’ve mentioned me on do explicitly say that what they’ve posted is from ChatGPT, for example the one on “Είσαι σε όλα τέλειος.”, which seems rather reasonable.

As far as whether we should try having someone moderate the forum to try to censor/remove content from ChatGPT, that we’ll have to give more thought. Perhaps we can try to discourage it with some sort of messaging as a first step, and/or encourage people to put that sort of content in their sentence notes which are not shared / publicly visible.

That said, and to your point, ChatGPT content does seem to be ubiquitous, and at least the comments I’ve seen so far explicitly say they’re from ChatGPT so you know to double check it (or ignore it in your case). Without mentioning that, for example just copy/pasting the output, I’m not sure how we’d moderate it at all - try integrating something like GPT Zero? So my initial thought is that at least including that a particular comment is from ChatGPT is something that we should also encourage.

Do you have any particular solutions in mind? How would you expect a ban to be enforced?

I fear that won’t help. People who mindlessly believe and spread garbage information are … well … mindless. Some message they can ignore won’t discourage mindless people.

I think that (at least a portion of) these offenders post ChatGPT’s output as comments precisely because their text will be publicly visible. Maybe they crave the sense of validation they hope they’ll get from doing this. I don’t know how their brains work. Again, I’m talking only about a portion of the offenders. For some it might help, but I imagine that others get a feeling of superiority from the knowledge that they can use this new technology (which isn’t hard to use and which everybody else is also able to use, but that part they shut out), and they know they won’t get that feeling if others don’t see proof of them using ChatGPT. In other words, they need their comments to be publicly visible. Ergo, I fear that approach won’t help all that much (at least not as much as desired or necessary).

I understand I’m being negative. I only say that/why potential solutions likely won’t work, I don’t propose solutions. Unfortunately, I don’t have a solution. It’s a difficult problem.

@mike thanks a lot for being so open-minded about this! I totally understand that it’s not easy to come up with workable solutions to this problem, since it’s an entirely new kind of challenge that we are (and will be) dealing with. I am also far from calling for a ban on AI, because we all know that’s unrealistic. Nevertheless, I strongly believe this has to be discussed before the problem gets out of control and the reputation of Clozemaster suffers.

One solution I would propose is to have two sub-sections in every main “Sentence discussions” section for each language: one for the AI explanations and one for the actual discussions. Please note that the user I was referring to, and in whose posts I tagged you, is obviously not interested in any kind of discussion - they have never reacted in any way to me pointing out that what they posted is incorrect, and they have never corrected any of their posts in this regard. So their misinformation will stay there forever. Yes, they do mention that it’s from ChatGPT, but in my opinion this is not enough - I’d like to see a clear statement, just like the one from the very AI they use, something like “These are AI-generated explanations. Because they are not based on any critical research, they might be incorrect or misleading, so you’re strongly encouraged to consult other sources”. I imagine some people might find this a bit exaggerated, but in my opinion we have some obligations here: if this were a forum for fan fiction or cooking recipes, that would be totally ok. But I believe the environment we strive to create here is of an educational nature, so any hint of misinformation should be pointed out and taken seriously.

The advantages of having the separate sub-sections for both the AI explanations and discussions between actual humans:

- people who care more about feeling validated than actually learning the language (see @davidculley’s points) would still have their safe space here. Hopefully they would stick to it, and those of us who are not interested in their AI-generated content would have an easy way of ignoring it

- no one will feel like they are being censored or targeted for “just trying to help”. I do understand that many people do it in good faith, without fully understanding the tools they are using

- the AI sub-forum could have the automatic default disclaimer I mentioned above, always appearing above these explanations. To be honest, I think something like this should also appear in the AI explanations offered by Clozemaster, because I also saw a lot of incorrect information there.

- if someone posts their AI generated content in the wrong section of the forum, admins can easily and quickly move it to the correct one

I’m very curious to hear what your thoughts are!

In the meantime I was informed that my post in another topic has been hidden, because “the community feels it is not a good fit for the topic”. In my post I asked if the newly introduced feature (discussed in this very topic) could also be implemented in the app, instead of only in the web version. While the reasons for such a question being “not a good fit for the topic” are beyond my understanding, the actual reason I am mentioning it is that I would like to ask for clarification - how does flagging a post work? If I flag a post that I have already demonstrated to be factually wrong and misleading, will it also be hidden?

That ban seems completely unfair and unwarranted.

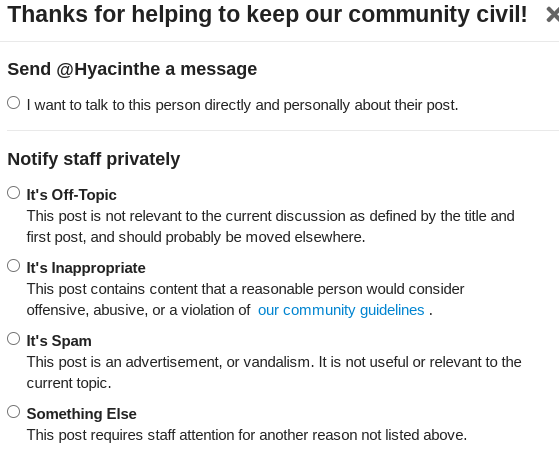

Here are a few screenshots to show how it’s done. In the first picture, you click on the “…” icon to expand it to a flag, which brings up a number of options.

Click on the flag to get this dialog:

My guess is that someone chose the “It’s Inappropriate” option.

I have never used any of the banning options, but I have assumed that any proposed ban would not be applied immediately, only after staff intervention. I fail to see how any staff person would agree that your post was inappropriate, though.

AI misinformation is now a worldwide social problem and will become an increasingly pernicious epidemic in every sphere of life every place on earth. It already corrodes our most important institutions with no tenable solution in sight. If the Gutenberg press is any guide, this will result in decades if not centuries of social fracture and conflict before people learn to manage it. This is a good opportunity to practice your equanimity so you’ll be prepared when it painfully and senselessly disrupts your employment, politics, and personal relationships, and creates a dead internet where trust is impossible. Cheers! ;-p

A little update: during the past few days, several of my posts have been hidden, then made visible again, then hidden again. I also got a message telling me exactly which sentences I should remove in order to have my post visible again, all because I asked additional questions that were related to, but not 100% about, the topic discussed. That’s totally ok, no hard feelings, I understand that rules are rules. But at the same time, as an experiment, a few days ago I flagged a post containing a serious case of misinformation, which I had clearly demonstrated. Absolutely nothing happened to it; it’s still visible to every learner who has the misfortune of stumbling upon it. This tells me clearly that fighting misinformation is very low on the priorities list, while hiding and unhiding posts for not sticking to the topic is a top priority, since so much time and effort has been spent on it, while nothing has been done about the flagged post. In this case I will follow @seveer’s wise advice and stop being bothered by it and wasting my time on posting corrections. It is a pity, though. I really hope one day this issue will receive more attention. I wish all of you happy learning, free of AI mistakes!

That shouldn’t/can’t be the case, since it’s an automatic process. There’s no human involved.

Do you have a link to that post?

It’s an automatic process that treats every case equally. There is no human involved. So it’s inaccurate to speak of high and low priority and unequal treatment.

It’s an automated process. There is no human involved.

Again, it’s an automatic process that gives every flagging the same attention. Also, it has nothing to do with Clozemaster. It’s a feature of Discourse, the forum software that Clozemaster uses.

That’s the definition of off-topic.

You were informed which parts of your posts are off-topic, and you were informed that if you remove the off-topic parts of your posts (and optionally copy and paste them into a new, separate thread), your posts will become visible again. Which they did, by your own admission. So, isn’t all well again? And isn’t the forum in a better state now, since a few posts that were previously off-topic are now on-topic?

You made some comments that had nothing to do with the topic, edited them to make them on-topic, and your comments were restored. I don’t see a problem here. It’s all an automated process. There’s no human involved who doesn’t value or deprioritizes your contributions.

Reading over this thread, since it was said that AI generated posts are automatically marked, maybe the best approach would be to “collapse” such posts by default, with the option to “expand” them and read what the robots spat out after chewing up the internet. (The format should probably differ from the “ignored comment” formatting.) At the very least, it would save people like me some scrolling time when we want to see others’ thoughts on a matter. I’d rather not wade through what will, in all likelihood, prove to be verbose silliness from a machine that doesn’t really understand what words mean or how they work.

Warning, this is highly Off-Topic: I tried to message @Hyacinthe, encouraging them not to give up on posting corrections and making this a better place. Unfortunately, it seems that the user isn’t accepting any messages (I assume because of the incidents mentioned here), so I’m posting here instead, hoping the message gets through.

Back to the topic: Here’s an interesting comment from a professor of mathematics:

The era of ChatGPT is kind of horrifying for me as an instructor of mathematics… Not because I am worried students will use it to cheat (I don’t care! All the worse for them!), but rather because many students may try to use it to learn.

For example, imagine that I give a proof in lecture and it is just a bit too breezy for a student (or, similarly, they find such a proof in a textbook). They don’t understand it, so they ask ChatGPT to reproduce it for them, and they ask followup questions to the LLM as they go.

I experimented with this today, on a basic result in elementary number theory, and the results were disastrous… ChatGPT sent me on five different wild goose-chases with subtle and plausible-sounding intermediate claims that were just false. Every time I responded with “Hmm, but I don’t think it is true that [XXX]”, the LLM responded with something like “You are right to point out this error, thank you. It is indeed not true that [XXX], but nonetheless the overall proof strategy remains valid, because we can […further gish-gallop containing subtle and plausible-sounding claims that happen to be false].”

I know enough to be able to pinpoint these false claims relatively quickly, but my students will probably not. They’ll instead see them as valid steps that they can perform in their own proofs.

I see so many adults and professionals talking about how they are using LLMs to deepen their understanding of things, but I think this ultimately dives headlong into the “Gell-Mann amnesia” effect — these people think they are learning, but it only feels that way because [they] are ignorant enough about the topic they’re interested in to not detect that they are being fed utter [B.S.].

[…]

Exactly this unwelcome effect also happens when learning a language. Here’s an example where ChatGPT confidently sent me down the wrong path when it was asked about Spanish grammar, with regard to a sentence that contained a grammatical error:

Because I’m not very good at Spanish (yet), I couldn’t detect by myself that I was being fed B.S., unlike the professor in the comment. It was only thanks to @morbrorper that I realized that the answer given by ChatGPT (and the Spanish sentence in question) was wrong.

Before wading into a debate like this one, it’s important to wrap your head around how astoundingly bad AI is at trying to predict ANYTHING, or to extrapolate even one hair’s breadth beyond the reach of an existing dataset.

It’s hilarious how stupidly optimistic most people are about this stuff—and have been, throughout the history that led up to it. Even the insiders. (Especially the insiders.)

In the '60s, computer geeks in the U.S. military predicted that machine translation would be able to match human translators by 1980.

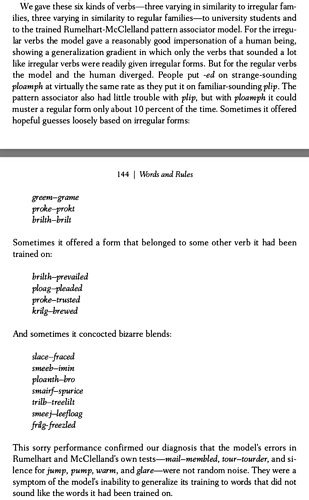

That hubris was gone by the '80s, but was replaced by an equally derpy confidence that computers could be programmed easily enough to actually LEARN grammar and correctly PREDICT the most probabilistically likely forms of words that hadn’t been explicitly written into their databases.

That went about as well as you might think. As Steven Pinker told it in Words and Rules (published in 1995):

That was over thirty years ago, but pretty much every later attempt to write rules-based code to predict grammar ended up flopping just as hilariously badly as this one did (often far worse).

…and then the attempts slowly faded away.

By the early 2010s, worldwide computer science, biology, neuroscience and linguistics had more-or-less entirely let go of any hope of being able to figure out (i.e., reverse-engineer) human language or general brain function.

It’s rare for anybody to even flirt with the idea of actually UNDERSTANDING HOW these things fundamentally work, below surface-level descriptions, anymore. In the current era, we just throw more and more computing power at simulations of these things whose workings we still don’t understand.

AI translation pretty much works like Google Translate—which “understands” (is hardcoded with) very little, or more likely nothing at all, about how sentences are actually written in any language. (The pre-1995 computer model in the Pinker excerpt above performed hilariously badly, but it could at least TRY to take non-words and “guess” how they would work. Google Translate in 2025 is completely incapable of doing any operations with, or making any predictions about, non-words.)

Instead of trying to actually DO stuff, these models just analyze datasets of absolutely ungodly size, crunch the numbers, and spit out whatever happens to be the highest-probability outcome.

(Think how search engines generate “predictive search strings”—that list of Possible Ways You Might Want To Write The Rest Of This Line. Basically all generative AI worldwide is pretty much just THAT, except using other kinds of data rather than text.)
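
To make the "predictive search string" analogy above concrete, here is a toy sketch in Python. It is only an illustration of the "count what usually comes next and output the most frequent option" idea, not how any real search engine or AI model is implemented. The tiny corpus and the names `train_bigrams` and `predict_next` are made up for this example.

```python
from collections import Counter, defaultdict


def train_bigrams(sentences):
    """Count, for each word, which words follow it in the training text."""
    followers = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            followers[current][following] += 1
    return followers


def predict_next(followers, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    seen = followers.get(word.lower())
    if not seen:
        return None
    return seen.most_common(1)[0][0]


# Made-up training data, purely for illustration.
corpus = [
    "the cat sat on the mat",
    "the cat ate the fish",
    "the dog sat on the rug",
]
model = train_bigrams(corpus)

print(predict_next(model, "the"))    # most frequent follower of "the"
print(predict_next(model, "sat"))    # most frequent follower of "sat"
print(predict_next(model, "blorp"))  # never seen in training -> None
```

Note that this toy has nothing to say about a word it never saw ("blorp"), because it only looks things up in its counts. It contains no rule about how words or sentences work.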

To make things worse, all existing AI models route ALL translations through English, regardless of the start and end languages… so, translations like Italian <-> Spanish or Russian <-> Polish, which ordinary common sense would expect to be quite good (since those language pairs are extremely close cousins), end up being a complete crap show because they’re actually Italian-English-Spanish and Russian-English-Polish.

As far as brain function more generally, nobody is on a quest to understand HOW the brain works (on a neuron by neuron level) anymore. Now, people are just trying to build synthetic brains—without a clue what the hell is going on inside them—and just let them do their thang… either with quantum computer parts, or with “brain organoids” in a lab (do NOT google this if you are easily creeped out).

Which brings us to today’s AI.

It understands nothing, and its basic tendency is to do things not only badly, but even worse over time unless outside programmers keep stepping in, either to force the outputs inside artificial guardrails or to embed entire heaps of commercial code—LOTS of which is stolen intellectual property—into the models (or both).

The “even worse over time” part (which isn’t limited to obscure advanced stuff; we’re talking, like, simple arithmetic) happens because, when AI is given datasets to train on, it always trains itself on the ENTIRE dataset.

That probably sounds obvious… but that’s the ENTIRE entire dataset, even including grievously flagrant statistical outliers, and also including every error, mistake, or deliberately wrong thing in the original data, no matter how godawful. (AIs have no capacity to reject original training data.)

If you’ve ever taken a statistics class, you’ll know that outliers have a MUCH bigger effect on models than “typical” datapoints do. You’ve probably seen problems in which you have a regression line based on hundreds of data points, and then adding ONE crazy-ass datapoint way off in the corner of the plot is enough to flip the entire regression line upside-down.
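
For anyone who hasn't seen that kind of stats problem, here is a minimal sketch in plain Python. The data is invented (200 points lying exactly on a line, plus one deliberately extreme point), and `ols_slope` is just a hypothetical helper name for the textbook least-squares slope formula. It only illustrates the regression point above and says nothing about how any AI model is trained.

```python
def ols_slope(points):
    """Slope of the ordinary least-squares line through (x, y) points."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    return sxy / sxx


# 200 well-behaved points lying exactly on y = 2x + 1 (made-up data).
clean = [(x, 2 * x + 1) for x in range(200)]

# The same data plus ONE extreme point far off in a corner of the plot.
with_outlier = clean + [(5000, -20000)]

slope_clean = ols_slope(clean)
slope_outlier = ols_slope(with_outlier)

print(f"slope without outlier: {slope_clean:.3f}")
print(f"slope with outlier:    {slope_outlier:.3f}")
```

With these particular numbers the fitted slope goes from positive to negative after adding the single point. How far out a point has to be to do that depends on the data; a mild outlier would only nudge the line.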

Well, that’s pretty much 2020s AI for ya—except that understates the problem, because AIs keep RE-training themselves on their own outputs. When those data points include a bunch of outliers based on outliers based on outliers based on outliers based on ···, you can probably guess the end result.

So… stay skeptical, lol.

But WITH proper skepticism—and a willingness to search more widely for, and/or ask fluent speakers (if you know any) about, words where you’re feeling “eeeeehhhh” about the auto-explanations—the GPT explanations can be a valuable learning tool.

Personally, I don’t want to see any ai copypasta in the sentence comments. It’s noise. I find the ai useful occasionally, but I can press the button myself if I want to.

Ah, your last para, you took the words right out of my mouth ;-) lol