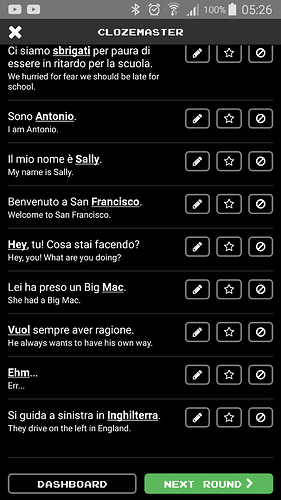

This may just be a coincidence, but the quality of the questions this morning fell off a cliff. For example:

Out of 10 questions, 6 were arguably useless:

- Antonio you could argue either way; at least it’s an Italian name.

- Sally, on the other hand, is an English name. I think I have a handle on English names.

- San Francisco, similarly, is nothing more than the standard pronunciation and spelling of an American city.

- “Hey” is an onomatopoeic word (so… not REALLY the sort of thing that I need to learn) and in any case is usually written in Italian as “Ehi!”, not the English “Hey”.

- Big Mac? I need to learn “Big Mac” in Italian, which is pretty much identical to English except for a slight variation in pronunciation? Seriously? If you are travelling in Italy, where there is ITALIAN food, you’re hardly likely to need those two words. Ever.

- Ehm/Err. Again I don’t think there is much to be gained by typing one of the multiple possible spellings of a noise that is one step above a grunt.

As a side note, because I had no way of knowing which spelling was wanted for that last one, I switched to multiple choice. Once I did so, I could no longer type the entry (Android app). Why would I want to? Because you’re more likely to retain information if you have to put in more effort than clicking a button. Typing the answer after switching to multiple choice still works on the web, though.

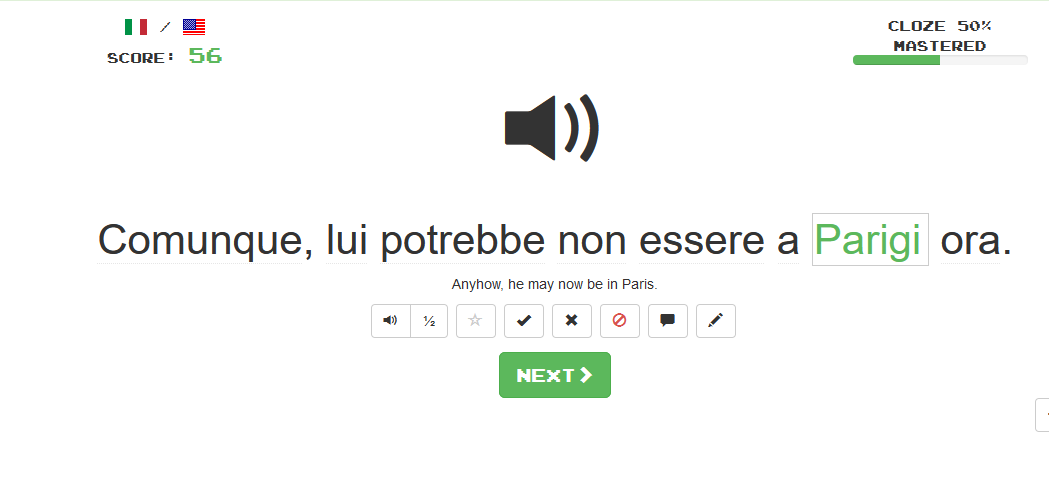

In my second run, the level of the questions wasn’t as bad (I question whether “America” is useful as a missing word, but you could argue that either way). On the other hand, there was this:

Here the translation was the exact opposite of the sentence. (I reported it.)

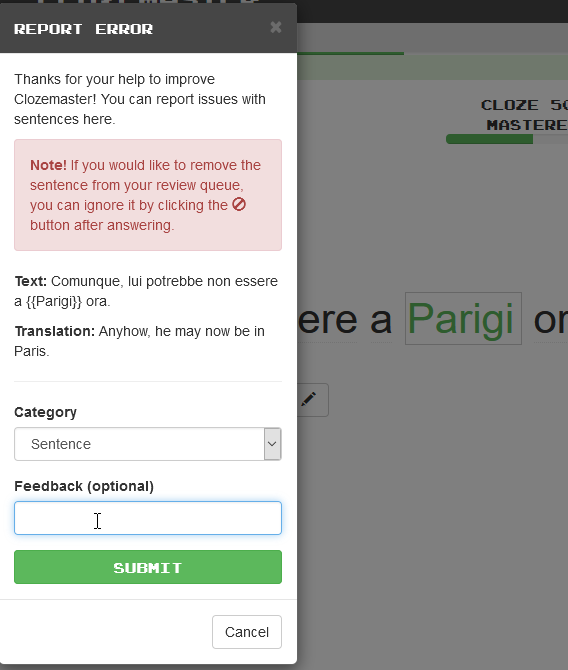

Speaking of which, I may be misremembering, but I thought that the report error dialog used to have more than a “letter box slot” text box to type the problem into. (I could be wrong; it’s been a while since I’ve had to file one.)

It’s not a critical issue but it does make it more difficult to type out the details of the problem.

I noted that there has been SOME change to the questions. (The removal of those ridiculously long ones that you needed to listen to for 5 minutes and then had to re-read anyway, because you couldn’t remember the whole sentence, is something that I wholeheartedly endorse.) However, I don’t know whether this is a systemic issue or just bad luck.